In the current system, we use Tunnels to help connectivity in constrained environments. Specifically, we want a ring that has no broken connections and so we use Tunnels to ammeliorate that. There are two issues with Tunnels that I have been poking me. A) Connections support only a single Edge, so once we select an Edge type, we are stuck with it, regardless of how well it performs. Tunnels allow us to add Tunneling proxies, if a Tunnel occurs due to slow NAT traversal, we are stuck with it and this case has been observed! B) Tunnels require the routing logic to be duplicated inside of them. Now granted, the original design of Tunnels couldn't really have an optimized routing algorithm, but as we add more features like proximity routing, it is unenjoyable to maintain two sets of code. Especially one that is more difficult to properly test!

How to deal with A). Let's make X attempt to form direct edges if a Tunnel exists, if we are so lucky to create one, we'll replace the TunnelEdge in the Connection with a non-TunnelEdge. So we need to define who X should be. Another possibility is to let the Tunnel become a wrapper for a direct edge. This way the TunnelEdge[Listener] is responsible for a obtaining that direct connection.

B) is a little trickier, but I think the best approach is to strip from the Tunnel a list of edges to send on and just use standard routing to pass the message to the remote end point. This would be particularly useful if we knew all of our neighbors' neighbors. In fact, we could remove all of the sync in a Tunnel if we had this information. This just requires some leg work.

I reckon, once A and B are handled, using Tunnels for wide area communication won't be such an issue any more.

Tuesday, December 16, 2008

Tuesday, December 9, 2008

A Few Things To Ponder Regarding Future Networks

Why do so many in P2P and academics think that IPv6 will be the end of NATs and all to all connectivity will be restored? I think there a few fallacies with these comments. First and most importantly the purpose of most firewalls and NATs in businesses and education right now is to provide isolation so that all traffic must pass through a single entry point, be filtered, and then finally delivered. A good firewall should prevent a user from sending an incoming packet into a firewalled network until a machine inside that firewall has established a need for that connection. Given all the arguments for openness, how does this model work in the future? Are admins to leave all ports to internal machines open? Should there be a communication mechanism between the firewall and the users machine informing him that someone wants to communicate with him on such and such a port. If we make things more complicated, the lay user will be caught up in the storm. If listening to those not in this field about their difficulties has taught me one thing, its that things are not simple enough. Obviously there should be room for those who want to tweak things themselves, but adding complexity at the users' end will not make things easier. Since IPv6 has already been well defined, there needs to be some way to guarantee integrity between two points in a network and the only way to do that over the entire Internet is through the user of overlays. The overlay needs to have support for users who only want to make outgoing connections, the ability to find faults in the underlying network and route packets around it, the overlay needs to be self-organizing, the overlay could even potentially be used to handle traffic control such that optimal paths for latency and bandwidth are taken rather than static routes.

I am not all knowing, but I am certainly smart enough to realize that the solution to P2P and distributed computing will not be IPv6. It may help to some degree. The bottom line, however, is that we need to develop techniques that protect the user as much as possible while still proving the richest content.

The idea that if we send a message to our router, letting him know, hey we want to connect to so and so, let his messages through, has a nice ring to it. Will routers support it? That I do not know, but that only solves the problem of end to end connectivity in non-faulty systems. There is still the issue of faulty areas in the network and non-optimal routes.

Coming back... it appears this idea of using a third party to route already exists both in Skype and for Mobile IP. It is called Triangular Routing. Jeez... people are smart :).

I am not all knowing, but I am certainly smart enough to realize that the solution to P2P and distributed computing will not be IPv6. It may help to some degree. The bottom line, however, is that we need to develop techniques that protect the user as much as possible while still proving the richest content.

The idea that if we send a message to our router, letting him know, hey we want to connect to so and so, let his messages through, has a nice ring to it. Will routers support it? That I do not know, but that only solves the problem of end to end connectivity in non-faulty systems. There is still the issue of faulty areas in the network and non-optimal routes.

Coming back... it appears this idea of using a third party to route already exists both in Skype and for Mobile IP. It is called Triangular Routing. Jeez... people are smart :).

Labels:

P2P Research

Rounding Up the Research Results

So it appears velocity routing was handled 1-deep in the Can paper (but you have to look carefully at 3.3 to notice it) although this is for a hypercube and explored with synthetic numbers only. There implementation closely matches (if not identically) the method I proposed which boils down to "maximum ratio of progress to rtt". Then there is discussion of optimizing shortcuts by using latency and even the convergence of the two. Though his metrics really don't make much sense, I quote "To select the candidate set, the distance to the destination is expressed in binary notation, and neighbor i is chosen to the set if there is a 1 in the ith position." I have NO idea what that has to do with proximity routing and perhaps it is revealed in their results where they suggest that proximity routing has no advantage when used with proximity-based shortcuts. Finally, all the results are based on very synthetic benchmarks and I can easily lits many cases where PR definitely help PS, think of the case where I am surrounded by all but 1 low latency node, if a packet is incoming, it has a 1 / N chance of coming in on a low latency branch and (N-1)/N chance of not. Considering that routes in a stable system are static, that could lead to very poor performance! So while PS helps traversing the ring faster, PR helps when we're getting closer. Perhaps there is a way to reconcile this.

So what is left undone: we have a system where there are 2*K near neighbors, when we are routing nearby, most likely the packet will be routed through one of them. If we make the assumption that each node also has at miniumum 2*K neighbors we can approximate how many hops it would take for this packet to arrive at the remote node. I propose that we may see a reduction in hops and latency if we come up with some heuristic to evaluate our latency to our neighbors and the potential for increased hop count and make a routing decision based upon that. If we know our neighbors, neighbors and their latency to them, we could further develop this routing mechanism to bring us even closer.

What makes this relevant? Well I guess that will be another post.

So what is left undone: we have a system where there are 2*K near neighbors, when we are routing nearby, most likely the packet will be routed through one of them. If we make the assumption that each node also has at miniumum 2*K neighbors we can approximate how many hops it would take for this packet to arrive at the remote node. I propose that we may see a reduction in hops and latency if we come up with some heuristic to evaluate our latency to our neighbors and the potential for increased hop count and make a routing decision based upon that. If we know our neighbors, neighbors and their latency to them, we could further develop this routing mechanism to bring us even closer.

What makes this relevant? Well I guess that will be another post.

Labels:

P2P Research

Organizing My Thoughts

Gosh, what an awful feeling. After 3 years of tackling issue after issue, I have realized I am dumb. I have trouble justify the work I have been doing so far as anything remotely mathematically scientific, perhaps, it is scientific in terms of usability, but given that it cannot be boxed up and mathematically analyzed leaves me bummed. Perhaps it stems from my mistake 7 years ago in not properly preparing for math curriculum that made me woefully ill prepared to handle and understand proofs coming from a eccentric but enlightened professor. Either way, my issues have culminated into something difficult to grasp, to which I say "Gosh, what an awful feeling."

So where does that leave me? I suppose I need to look at a few angles here, I am definitely doing some useful work in terms of fixing our problem: forming almost direct shortcuts with remote peers. I think that has to be the bottom line from all my research I am doing now (I'll do a follow up post on that following this). More importantly, long term, to fulfill my thirst and desire, I need to discover my own problem or issue and make that a topic of interest. If I don't, I will be stuck in a Ph.D. of mediocracy. That WOULD be a waste.

So where does that leave me? I suppose I need to look at a few angles here, I am definitely doing some useful work in terms of fixing our problem: forming almost direct shortcuts with remote peers. I think that has to be the bottom line from all my research I am doing now (I'll do a follow up post on that following this). More importantly, long term, to fulfill my thirst and desire, I need to discover my own problem or issue and make that a topic of interest. If I don't, I will be stuck in a Ph.D. of mediocracy. That WOULD be a waste.

Labels:

Ph.D. Research

My current thought is this... before we attempt to form intermediate shortcuts with remote entities, we should attempt to find the best path to that entity. Once we have the best path, it should be natural that if any of those hops were to form intermediate shortcuts that it should only make things faster. Now if the triangulation were violated, we would need to continually optimize our route and not assume it is ever optimal, but in only a way such that it does not interefere with traffic.

Some key things that need to be figured out, when do we attempt to find the best path between two points, when do we believe we have an optimal enough path such that we should begin forming intermediate shortcuts?

Perhaps a simple metric is this, when a direct shortcut has been attempted we should also begin finding the optimal path.

Some key things that need to be figured out, when do we attempt to find the best path between two points, when do we believe we have an optimal enough path such that we should begin forming intermediate shortcuts?

Perhaps a simple metric is this, when a direct shortcut has been attempted we should also begin finding the optimal path.

Labels:

Uncategorized

Saturday, December 6, 2008

Understanding Vivaldi

Subtitled: Or so I hope!

As I mentioned before, in our code, we have Vivaldi, but the code was rather complex especially if you look at where (I think) it comes from this paper and this code. I could not reconcile what was happening! One thing I must recommend to anyone that is working on a group project or wants their work to continue after you leave, make sure the code you share is clean and document the algorithm (regardless of complexity). If the code isn't clean, it may be difficult or impossible to understand the code. If the algorithm isn't documented, you leave it up to the next guy to attempt to understand what you were doing and heaven forbid you did something non-standard try to figure out why. No one is immune, I compared the Vivaldi paper and code and they are very different but it is nowhere documented why they made those changes!

Anyway onto how I understand Vivaldi.

Each point has position X and error E set to [0, ..., 0] and 1 respectively.

at node i: ProcessSample(Xj, Ej, latency_between_ij)

- distance = EuclideanDistance(Xi, Xj)

- relative_error = (latency - distance) / latency

// We square to give less weight to high error node

- le = Ei^2

- re = Ej^2

- new_error = relative_error * (le / (re + le)) + Ei * (re / (le + re))

- Ei = (19 * Ei + new_error) / 20

- SetInBounds(Ei, 0, 1)

- ForceVector = Xi - Xj

- if(PlaneDirection(ForceVector) < .0001)

- - ForceVector.Bump (just to move it a little from the origin)

- length = Direction(ForceVector)

- unit = 1.0 / length

- weight = .1 * (Ei /(Ei + Ej))

- ForceVector * (unit * weight * (latency - distance))

- Xi += ForceVector

- SetInBounds(Xi.Height, 100, 10000)

So to clarify some terms Euclidean Distance is ||Xi - Xj|| + Xi.Height + Xj.Height

Xi - Xj = Xi.Coords - Xj.Coords, Xi.Height + Xj.Height

SetInBounds ensure that the system stays stable

This differs from the paper by using a exponential weighted moving average instead of static dampers on the error and the introduction of the Bump also generation of the unit vector (in my mind) was a bit hazy. This differs from the code in that the ForceVector is Xi - Xj instead of Xj - Xi, the latter would cause explosions since it actually moved us closer when we wanted to move away and away when we wanted to move closer. Also the code had no notion of limiting the height, but in my experiments I have seen it explode. Another strange feature in the code was the inverting of the ForceVector.Height, which I found when not doing improved results. Sadly all my effort seems to be in vain as the results are similar to what was presented in the paper.

The next step is to integrate this with the Graph and use it to test potential shortcut optimizations we can make. Interestingly, the king data set does not work that well with Vivaldi (> 70% of all to all pairs are within 15% of being accurate), but that will not deter my efforts!

As I mentioned before, in our code, we have Vivaldi, but the code was rather complex especially if you look at where (I think) it comes from this paper and this code. I could not reconcile what was happening! One thing I must recommend to anyone that is working on a group project or wants their work to continue after you leave, make sure the code you share is clean and document the algorithm (regardless of complexity). If the code isn't clean, it may be difficult or impossible to understand the code. If the algorithm isn't documented, you leave it up to the next guy to attempt to understand what you were doing and heaven forbid you did something non-standard try to figure out why. No one is immune, I compared the Vivaldi paper and code and they are very different but it is nowhere documented why they made those changes!

Anyway onto how I understand Vivaldi.

Each point has position X and error E set to [0, ..., 0] and 1 respectively.

at node i: ProcessSample(Xj, Ej, latency_between_ij)

- distance = EuclideanDistance(Xi, Xj)

- relative_error = (latency - distance) / latency

// We square to give less weight to high error node

- le = Ei^2

- re = Ej^2

- new_error = relative_error * (le / (re + le)) + Ei * (re / (le + re))

- Ei = (19 * Ei + new_error) / 20

- SetInBounds(Ei, 0, 1)

- ForceVector = Xi - Xj

- if(PlaneDirection(ForceVector) < .0001)

- - ForceVector.Bump (just to move it a little from the origin)

- length = Direction(ForceVector)

- unit = 1.0 / length

- weight = .1 * (Ei /(Ei + Ej))

- ForceVector * (unit * weight * (latency - distance))

- Xi += ForceVector

- SetInBounds(Xi.Height, 100, 10000)

So to clarify some terms Euclidean Distance is ||Xi - Xj|| + Xi.Height + Xj.Height

Xi - Xj = Xi.Coords - Xj.Coords, Xi.Height + Xj.Height

SetInBounds ensure that the system stays stable

This differs from the paper by using a exponential weighted moving average instead of static dampers on the error and the introduction of the Bump also generation of the unit vector (in my mind) was a bit hazy. This differs from the code in that the ForceVector is Xi - Xj instead of Xj - Xi, the latter would cause explosions since it actually moved us closer when we wanted to move away and away when we wanted to move closer. Also the code had no notion of limiting the height, but in my experiments I have seen it explode. Another strange feature in the code was the inverting of the ForceVector.Height, which I found when not doing improved results. Sadly all my effort seems to be in vain as the results are similar to what was presented in the paper.

The next step is to integrate this with the Graph and use it to test potential shortcut optimizations we can make. Interestingly, the king data set does not work that well with Vivaldi (> 70% of all to all pairs are within 15% of being accurate), but that will not deter my efforts!

Labels:

P2P Research

Friday, December 5, 2008

How Things Fit Together

My current thought is this... before we attempt to form intermediate shortcuts with remote entities, we should attempt to find the best path to that entity. Once we have the best path, it should be natural that if any of those hops were to form intermediate shortcuts that it should only make things faster. Now if the triangulation were violated, we would need to continually optimize our route and not assume it is ever optimal, but in only a way such that it does not interefere with traffic.

Some key things that need to be figured out, when do we attempt to find the best path between two points, when do we believe we have an optimal enough path such that we should begin forming intermediate shortcuts?

Perhaps a simple metric is this, when a direct shortcut has been attempted we should also begin finding the optimal path.

Some key things that need to be figured out, when do we attempt to find the best path between two points, when do we believe we have an optimal enough path such that we should begin forming intermediate shortcuts?

Perhaps a simple metric is this, when a direct shortcut has been attempted we should also begin finding the optimal path.

Labels:

P2P Research

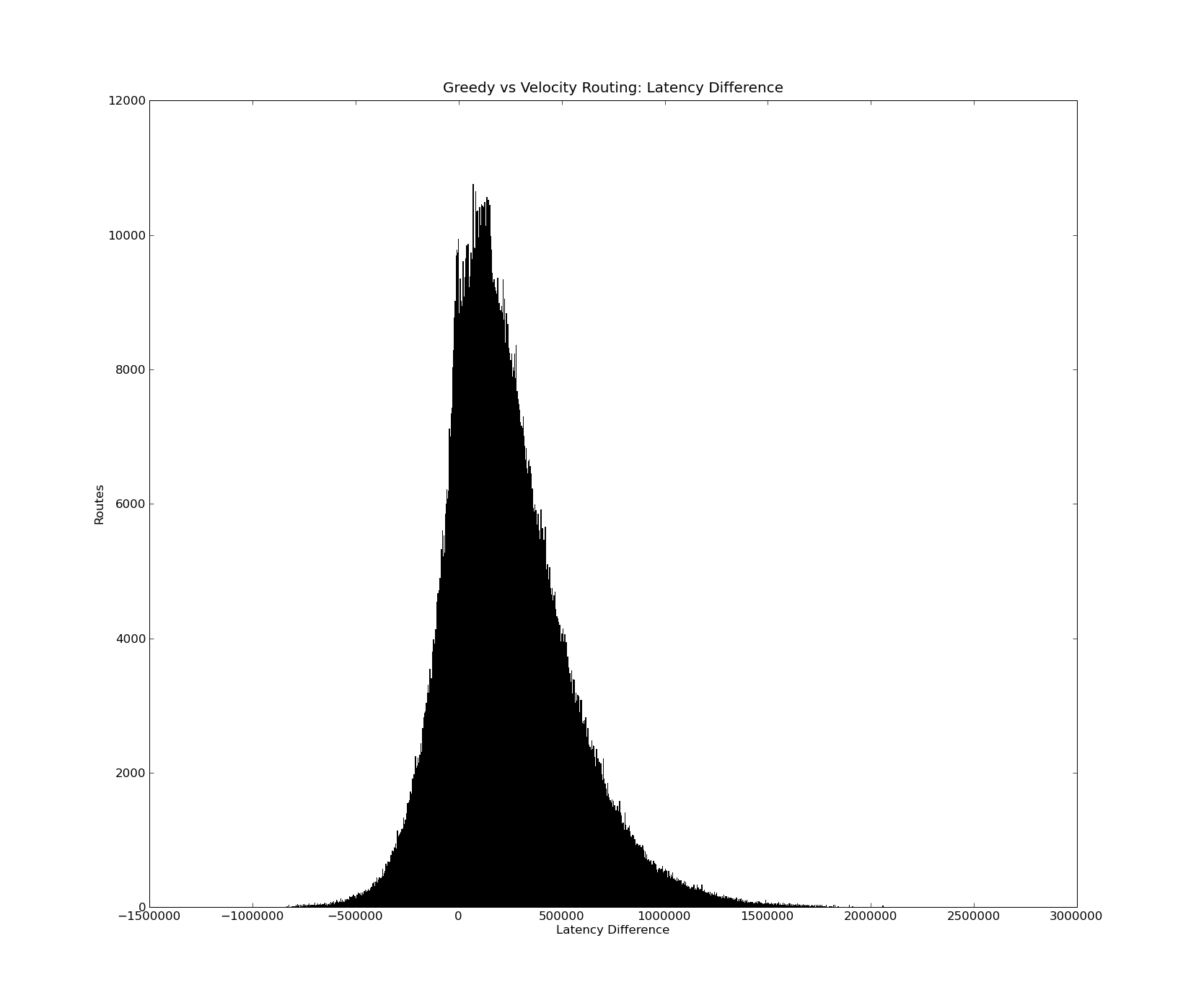

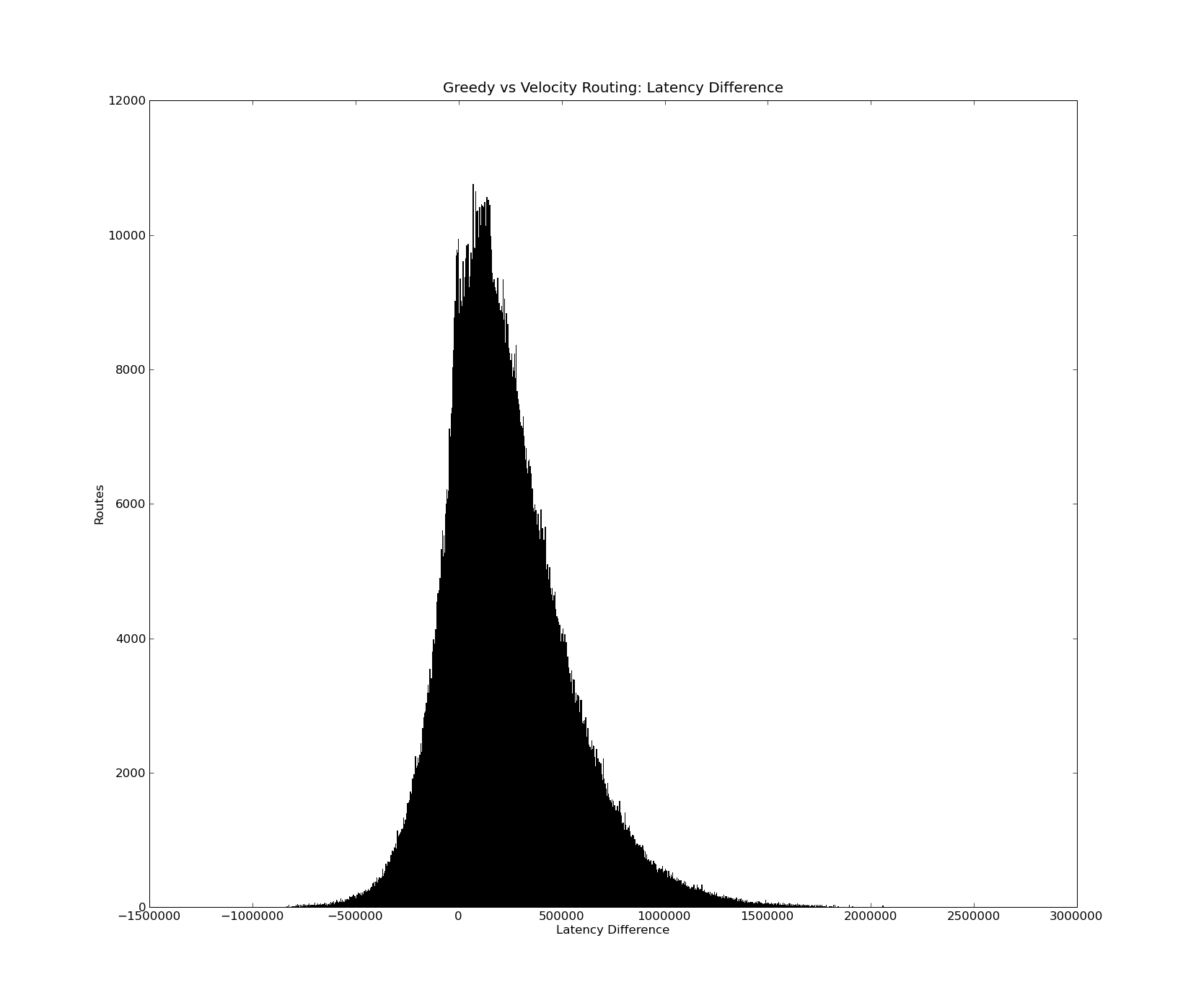

Initial Results for Routing

Although I did run tests for optimizing TunnelEdges based upon latency, I'm going to ignore those results for now. Also note, this work does not build upon any of the work done by the Vivaldi coordinates and simply requires nodes to know the latency between each other. Using the king data set here are the results for 1740 all to all single path.

Same: 440337

Better: Count: 2057229 Mean: 319291.72213 Stdev: 257587.511895

Worse: Count: 530034 Mean: -122263.274852 Stdev: 115704.074039

Val1: Mean: 560729.370171 Stdev: 299076.214084

Val2: Mean: 365177.613796 Stdev: 169037.922021

So overall it looks pretty promising, better beats worse 4:1 with an average decrease of 320 ms vs average increase of 122 ms. Val1 is the greedy routing while Val2 is velocity routing.

Now for the pretty histogram of the differences! I suppose the next step may be to isolate the cases where large loss is found and see if we can come up with a solution to avoid that while not taking too much away from the really positive gains. The worst loss is half the best gain!

Same: 440337

Better: Count: 2057229 Mean: 319291.72213 Stdev: 257587.511895

Worse: Count: 530034 Mean: -122263.274852 Stdev: 115704.074039

Val1: Mean: 560729.370171 Stdev: 299076.214084

Val2: Mean: 365177.613796 Stdev: 169037.922021

So overall it looks pretty promising, better beats worse 4:1 with an average decrease of 320 ms vs average increase of 122 ms. Val1 is the greedy routing while Val2 is velocity routing.

Now for the pretty histogram of the differences! I suppose the next step may be to isolate the cases where large loss is found and see if we can come up with a solution to avoid that while not taking too much away from the really positive gains. The worst loss is half the best gain!

Labels:

P2P Research

Thursday, December 4, 2008

Something Else To Consider

Recent work with a fellow lab in the Archer project reminded me of a problem we may encounter more in the future, labs tend to be behind firewalls that only allow TCP in/out. This is premise for my current work: Improving Overlay Performance in Constrained Networks, but to assist in this problem, I want to look into having a single routing machine for a cluster behind one of these firewalls. This would reduce the amount of constrained nodes in the network and make it easy for users to create non-Grid Appliance deployments without having to deploy IPOP on a single physical host. This is similar to work done by a fellow lab-mate, that work is called ViNe.

Labels:

Ph.D. Research

Tests Cases For Improving Overlay Performance in Constrained Networks

What we want to test: latency, bandwidth, and packet failure rate

We can test latency using existing projects such as the Brunet.Graph and the Brunet.Simulator. Since we have no information on packet failure rate testing this on these two products becomes somewhat tricky, but I could look up discussion on failure rate theory and/or use a sliding metric.

Bandwidth tests require more real systems. We currently have a student attempting to install NistNAT on a virtual machine and we could also do wide-area tests involving planetlab.

Finally, we would want a full system test involving a test of our real system (Grid Appliance or SocialVPN) and gather statistics using them.

So the next thought is to determine the test metrics. I would suppose the best mechanism for test would be to look at individual points to determine the amount of connections that perform better, worse, or stay the same given a specific optimization.

So before my imagination gets any better of me, I need to take a step back and add those features to the system. At which point, I can start discussing the addition of these new optimizations.

We can test latency using existing projects such as the Brunet.Graph and the Brunet.Simulator. Since we have no information on packet failure rate testing this on these two products becomes somewhat tricky, but I could look up discussion on failure rate theory and/or use a sliding metric.

Bandwidth tests require more real systems. We currently have a student attempting to install NistNAT on a virtual machine and we could also do wide-area tests involving planetlab.

Finally, we would want a full system test involving a test of our real system (Grid Appliance or SocialVPN) and gather statistics using them.

So the next thought is to determine the test metrics. I would suppose the best mechanism for test would be to look at individual points to determine the amount of connections that perform better, worse, or stay the same given a specific optimization.

So before my imagination gets any better of me, I need to take a step back and add those features to the system. At which point, I can start discussing the addition of these new optimizations.

Labels:

P2P Research

Improving Overlay Performance in Constrained Networks

Background: Our work currently focuses on using structured p2p overlay networks (Brunet) for routing packets for our distributed VPN software (IPOP). Routing small control data through the overlay is fine, but when a user wants to perform bandwidth intensive tasks, routing through the overlay can perform worse than a direct connection to the remote end point.

Problem: In constrained networks, forming direct connections between two peers may not be possible. How do we improve this situation? Secondly, in our current implementation of forming direct connections, we basically form a direct connection with anyone we have IP traffic with, this will not scale well.

Related Work: Improving routing using user-selected supernodes - SBARC, improving bandwidth with BitTorrent - Ono, best path detection - Ant routing

What We Have Now: Currently in our system we have a Vivaldi system (that is a bit heavy and could possibly use a rewrite) which could potentially be turned into a generic system information database that also includes Vivaldi coordinates and latency between two edges. The ability to select shortcuts based upon physical (latency-based) proximity. Velocity based routing, which is a greedy-like routing algorithm that takes latency into consideration.

Deadline: Ideally, we want to have a paper at ICAC '09 with a deadline of 01/19/09 giving a mere 1.5 months to get something wrapped up!

Possible Solution: A recently graduated colleague of mine did some initial work on solving this problem. His solution was to have the two end points attempt to discover mutually agreeable proxies and then form a tunnel edge over them. There were two methods to discover proxies: search the Vivaldi coordinates of the system to find good candidates or use existing neighbors of the two nodes. This work has a direct relation to supernodes paper mentioned above with the exception that it is somewhat autonomous.

The problems are a few problems with this approach. By forming a Tunnel edge between the two end points, we move routing decisions away from the routing algorithms and into the Tunnel edge. The basic premise is that if we do not have a connection then we are not performing optimally, this may be untrue! There is no control logic on how many proxy connections we form and connections are not free.

What looks like the best solution is to take this initial work, add ant like capabilties to the system so that we don't create unnecessary optimizations, only make direct connections and only have routing logic in the routers, and to move users towards velocity routing. The question is where does the Ant go in this problem and personally, I would think we would want the Routers (or data handlers) to communicate with the Ant processor that way we can ignore packets that are delivered locally and do not have to double parse a packet.

Potential Issues: Should we prequalify the velocity routing prior to this paper? Perhaps we should potentially simultaneously publish a workshop paper containing the velocity routing at the same time we do the ICAC paper? This has the potential to conflict with the recent graduates plans for writing a workshop paper discussing shortcut-tunneling, but given that he left over 4 months ago and that we have a test environment to do this in and a need for it, I would like to get it moving!

Next Step: Design environments for testing what we have now followed by implementing tests for these theories.

Problem: In constrained networks, forming direct connections between two peers may not be possible. How do we improve this situation? Secondly, in our current implementation of forming direct connections, we basically form a direct connection with anyone we have IP traffic with, this will not scale well.

Related Work: Improving routing using user-selected supernodes - SBARC, improving bandwidth with BitTorrent - Ono, best path detection - Ant routing

What We Have Now: Currently in our system we have a Vivaldi system (that is a bit heavy and could possibly use a rewrite) which could potentially be turned into a generic system information database that also includes Vivaldi coordinates and latency between two edges. The ability to select shortcuts based upon physical (latency-based) proximity. Velocity based routing, which is a greedy-like routing algorithm that takes latency into consideration.

Deadline: Ideally, we want to have a paper at ICAC '09 with a deadline of 01/19/09 giving a mere 1.5 months to get something wrapped up!

Possible Solution: A recently graduated colleague of mine did some initial work on solving this problem. His solution was to have the two end points attempt to discover mutually agreeable proxies and then form a tunnel edge over them. There were two methods to discover proxies: search the Vivaldi coordinates of the system to find good candidates or use existing neighbors of the two nodes. This work has a direct relation to supernodes paper mentioned above with the exception that it is somewhat autonomous.

The problems are a few problems with this approach. By forming a Tunnel edge between the two end points, we move routing decisions away from the routing algorithms and into the Tunnel edge. The basic premise is that if we do not have a connection then we are not performing optimally, this may be untrue! There is no control logic on how many proxy connections we form and connections are not free.

What looks like the best solution is to take this initial work, add ant like capabilties to the system so that we don't create unnecessary optimizations, only make direct connections and only have routing logic in the routers, and to move users towards velocity routing. The question is where does the Ant go in this problem and personally, I would think we would want the Routers (or data handlers) to communicate with the Ant processor that way we can ignore packets that are delivered locally and do not have to double parse a packet.

Potential Issues: Should we prequalify the velocity routing prior to this paper? Perhaps we should potentially simultaneously publish a workshop paper containing the velocity routing at the same time we do the ICAC paper? This has the potential to conflict with the recent graduates plans for writing a workshop paper discussing shortcut-tunneling, but given that he left over 4 months ago and that we have a test environment to do this in and a need for it, I would like to get it moving!

Next Step: Design environments for testing what we have now followed by implementing tests for these theories.

Labels:

P2P Research

Subscribe to:

Posts (Atom)